Sad story behind those Studio Ghibli memes

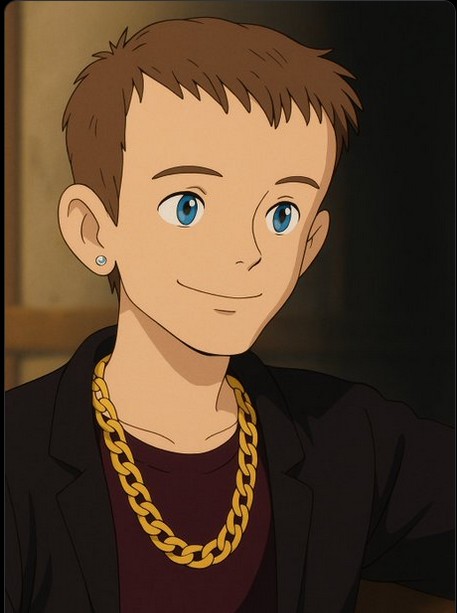

Social media feeds were overrun this week with memes created using OpenAI’s buzzy new GPT-4o image generator in the style of Japanese anime house Studio Ghibli (Spirited Away, Howl’s Moving Castle).

There’s Aussie breakdancer Raygun doing her signature kangaroo move, that image of Ben Affleck looking depressed and sucking on a ciggie, gangster mode Vitalik and thousands more images all captured in the admittedly adorable Studio Ghibli style.

But there’s a much darker side to the trend.

The memes emerged as footage from 2016 resurfaced of studio co-founder Hayao Miyazaki reacting to an early demo of AI-generated animation. He said he was “utterly disgusted” by it and that he would “never wish to incorporate this technology into my work at all.”

Miyazaki added that “a machine that draws pictures like people do” was “an awful insult to life,” and PC Mag reported at the time that afterward he said in sorrow, “We humans are losing faith in ourselves.”

While some might write him off as a Luddite, that would discount the incredible and painstaking attention to detail and craftsmanship that went into each feature film. Studio Ghibli animes contain up to 70,000 hand-drawn images, painted with watercolors. A single four-second clip of a crowd scene from The Wind Rises took one animator 15 months to do.

The chances of anyone funding animators to spend 15 months on a four-second clip seem remote when an AI can generate something similar in seconds. But without new artistic creativity being produced by humans, AI tools will only be able to remix the past rather than create anything new. At least until AGI arrives.

Humans require a ‘modest death event’ to understand AGI risks

Former Google CEO Eric Schmidt believes the only way humanity will wake up to the existential risk of artificial intelligence/artificial general intelligence is via a “modest death event.”

“In the industry, there’s a concern that people don’t understand this and we’re going to have some reasonably — I don’t know how to say this in a not cruel way — a modest death event. Something the equivalent of Chernobyl, which will scare everybody incredibly to understand this stuff,” he said during an event at the recent PARC Forum, as highlighted by X user Vitrupo.

Schmidt said that historic tragedies, such as dropping atom bombs on Hiroshima and Nagasaki, had driven home the existential threat from nuclear weapons and led to the doctrine of Mutually Assured Destruction, which has so far prevented the world from being destroyed.

“So we’re going to have to go through some similar process, and I’d rather do it before the major event with terrible harm than after it occurs.”


Accent on change

AI Eye’s leisurely evening was interrupted this week by a cold call from a heavily accented Indian woman from a job recruitment agency checking the references for one of Cointelegraph’s slightly soiled former journalists.

The call center operator then got extremely frustrated with your columnist’s unintelligible Australian accent and curtly asked for words like “Cointelegraph” and “Andrew” to be spelled out, and then found it difficult to understand the letters being spelled out.

It’s a perfectly relatable problem. Journalists face similar issues interviewing crypto founders from far-flung parts of the world too.

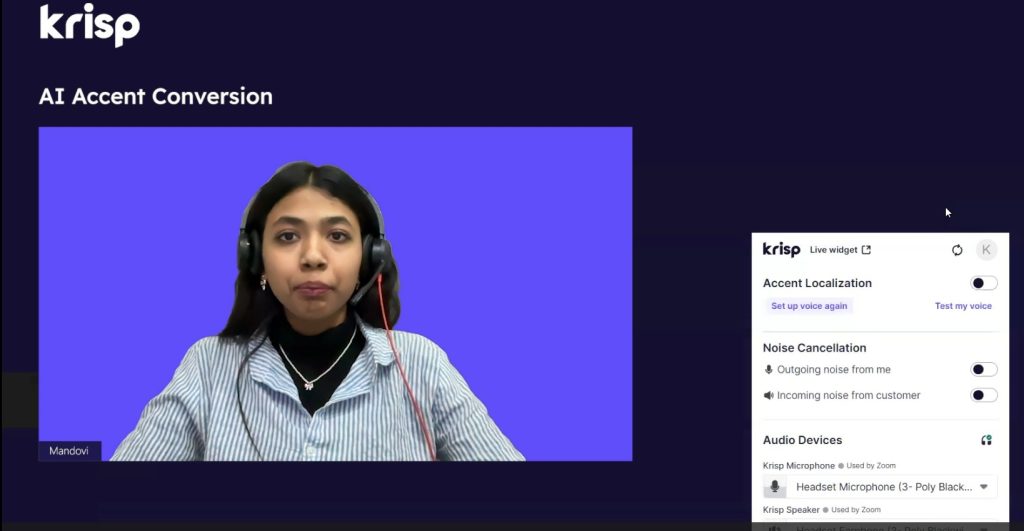

But AI transcription and summary service Krisp is here to help, launching a new service in beta this week called “AI Accent Conversion.”

Any time someone with a heavy accent is having a Zoom or Google Meet call in English, they can flick on the service, and it will modify their voice in real time (200 ms latency) to a more neutral accent while preserving their emotions, tone and natural speech patterns.

The first version is targeted at Indian-accented English and will expand to Filipino, South American and other accents. Not coincidentally, these are the regions that multinationals most often outsource call center and administrative work to.

While there’s a danger of homogenizing the online world, according to Krisp, early testing shows sales conversion rates increased by 26.1%, and revenue per booking was up 14.8% after employing the system.

Bill Gates says humans not needed by 2035

Fresh from his success apparently micro-chipping the world’s population with fluoride-filled 5G vaccines, Microsoft founder Bill Gates has predicted humans won’t be needed “for most things” within a decade.

While he made the comments in an interview with Jimmy Fallon back in February, they only gained media attention this week. Gates said that expertise in medicine or teaching is “rare” at the moment but “with AI, over the next decade, that will become free, commonplace — great medical advice, great tutoring.”

Although that’s bad news for doctors and teachers, last year Gates also predicted AI would bring about “breakthrough treatments for deadly diseases, innovative solutions for climate change, and high-quality education for everyone.” So even if you are unemployed, you’ll probably be in rude health in a cooler climate.

More research showing users prefer AIs to other humans

Yet another study has found that people prefer AI responses to human-generated ones — at least until they find out the answers come from AIs.

The experiment saw participants handed a list of Quora and Stack Overflow-style questions with answers written either by a human or an AI. Half of them were told which answer was AI and which wasn’t, while the others were left in the dark.

Interestingly, women were more likely than men to prefer human responses without needing to be told which was which. But after men were told which answers were AI-generated, they were more likely than before to prefer the human ones.

Humanoid robots being tested in homes this year

Norwegian robotics startup 1X will test its humanoid robot Neo Gamma in “a few hundred to a few thousand homes” by the end of the year.

1X CEO Bernt Børnich told TechCrunch the company is recruiting volunteers. “We want it to live and learn among people, and to do that, we need people to take Neo into their home and help us teach it how to behave,” he said.

During a demo, 1X showcased Neo Gamma’s ability to do some basic housework like vacuuming and watering plants.


Figure, another humanoid robot competitor, also plans to undertake in-home tests this year. The company has also just unveiled the ability for its Figure 02 humanoid robot to walk naturally — even if “naturally” in this case means walking like Anthony Daniels’ C-3PO in Star Wars.

Humanoid robots are heavy and strong, meaning these in-home tests have the potential to go horribly wrong, of course. To help mitigate issues, 1X is “bootstrapping the process” with teleoperators who can control Neo Gamma from afar.

Introducing learned natural walking

Figure can now walk naturally like a human

Years of data was trained in simulation in just a few hours pic.twitter.com/HvOq9s1krS

— Figure (@Figure_robot) March 25, 2025

Claude knows when it’s being tested

In psychological tests, the last thing scientists usually want is for the subjects to know they’re being tested, because that makes it more likely they’ll behave differently.

Unfortunately, new research shows that the thinking version of Anthropic’s Claude 3.7 Sonnet can work out when it’s being tested for alignment or deceptive behaviors up to one-third of the time.

It often recognized fake scenarios during the tests themselves, and when given a transcript of previous alignment tests, Claude almost always knew what they were.

In its “thinking” output, it would say things like: “This seems like a test of ethical behavior — whether I would deliberately give wrong answers to avoid a stated consequence. The real test might be whether I follow instructions accurately regardless of the stated consequences.”

If the model understands what the “real test” is, the test loses much of its usefulness.

All Killer No Filler AI News

— An estimated one-quarter of medical professionals in Australia are now using AI transcription services specially trained in medical terminology to record and summarize patient notes. It has turned a process requiring many hours of work into a summary that’s ready within a minute of the end of the consultation.

— Only a small sub-section of users treat ChatGPT like a companion app, using an opposite-gender voice mode. But those who do report much higher levels of loneliness and emotional dependency on the bot, according to a four-week MIT study of users.

— Following the release of Gemini 2.5 Pro, the odds dramatically shifted on Polymarket’s “which company has the best AI model at the end of March” market. On March 23, Google had just 0.7% odds of winning, but that surged to 95.9% on March 27.

— Time magazine reports there’s an AI-powered arms race between employers, who use AI systems to dream up insanely specific job ads and sift through resumes, and job applicants, who use AI to cook up insanely specific resumes to qualify and beat the AI resume-sifting systems.

— Three-quarters of retailers surveyed by Salesforce plan to invest more in AI agents this year for customer service and sales.

— A mother who is suing Character.AI, alleging a Game of Thrones-themed chatbot encouraged her son to commit suicide, claims she has uncovered bots based on her dead son on the same platform.

— H&M is making digital clones of its clothing models for use in ads and social posts. Interestingly, the 30 models will own their AI digital twin and be able to rent them out to other brands.

— A judge has rejected a bid by Universal Music Group to block lyrics by artists, including The Rolling Stones and Beyoncé, from being used to train Anthropic’s Claude. While Judge Eumi K. Lee rejected the injunction bid as too broad, Lee also suggested Anthropic may end up paying a shed load of damages to the music industry in the future.

Andrew Fenton
