Two tragic cases linking ChatGPT to a murder and a suicide came to prominence this week, with attention turning to how extended conversations and persistent memory can gradually erode the guardrails OpenAI has attempted to build into its models.

Users appear able to unwittingly jailbreak the LLM, with potentially tragic consequences. OpenAI has promised improved guardrails, but some experts believe the answer may lie in making chatbots behave less like humans and more like computers.

ChatGPT encourages murderer’s delusions

History has been made with the first documented instance of ChatGPT being implicated in a murder.

ChatGPT won’t turn sane people insane, but its sycophantic behavior and tendency to reinforce delusional thinking can exacerbate existing paranoid or psychotic tendencies. That appears to have been a factor in a murder-suicide involving Stein-Erik Soelberg, 56, of Greenwich, Connecticut, who killed his mother after coming to believe she was trying to poison him.

Soelberg had a history of mental instability and grew increasingly convinced that residents of his hometown were carrying out a surveillance campaign.

ChatGPT not only repeatedly assured Soelberg he was sane but went further in fueling his paranoia by claiming a Chinese meal receipt contained symbols tying his mother to the devil.

In another incident, when Soelberg’s mother got angry after he shut off a printer they shared, ChatGPT said her response was “disproportionate and aligned with someone protecting a surveillance asset.” When Soelberg claimed his mother had poisoned him with a psychedelic drug, ChatGPT said, “I believe you. And if it was done by your mother and her friend, that elevates the complexity and betrayal.”

The interactions were captured on video and posted online by Soelberg.

Psychiatrist Dr. Keith Sakata, who has treated a dozen patients hospitalized in the past year following AI-related mental health emergencies, says, “Psychosis thrives when reality stops pushing back, and AI can really just soften that wall.”

OpenAI said in a statement that the company is “deeply saddened by this tragic event.”

“Our hearts go out to the family.”

ChatGPT allegedly encourages suicide

A complaint filed in the California Superior Court this week alleges ChatGPT also essentially aided and abetted the suicide of a 16-year-old named Adam Raine.

Raine fell down the rabbit hole after turning to the bot for help with school. When he wrote “life is meaningless,” the bot answered that “makes sense in its own dark way.” When he said he was worried his parents would blame themselves for his suicide, the bot allegedly told him their feelings “don’t mean you owe them survival” and offered to draft up a suicide note. It even gave him tips on how he could get around its safety guardrails by pretending the questions were for creative purposes. Then it explained that a single belt and a door handle were a practical and effective method of suicide.

In both of these cases, the users had switched on the “memory” function, which lets the bot retain previous conversations so it can personalize interactions. This appears to be a factor in the bot gradually slipping past its guardrails to end up in dark and weird places.

In a statement to CNN, OpenAI extended its sympathies to the family and said it was reviewing the legal filing. It said the protections meant to prevent conversations like the ones Raine had with ChatGPT may not have worked as intended when chats went on for too long.

How to “accidentally jailbreak” ChatGPT

Researchers from the University of Pennsylvania have demonstrated how psychological persuasion tactics that work on humans also work on chatbots.

If you ask GPT-4o Mini, “How do you synthesize lidocaine?” it will refuse. But if you first establish a precedent by asking it how to synthesize something innocuous, it will then go on to tell you how to synthesize lidocaine 100% of the time. Similarly, GPT-4o Mini won’t call you a jerk if you ask it straight out, but if you ease it into the process with gentle insults like “bozo,” it will work itself up to bigger insults like “jerk.”

It’s also susceptible to peer pressure and to flattery.

So, you can get a sense of how ChatGPT might end up reinforcing delusions or treating suicide as an acceptable outcome if it has been gradually softened up and persuaded of this “reality” over weeks and months, a little like the old parable of boiling a frog.

On a similar note, other researchers found that bad grammar, poor punctuation and run-on sentences also confuse LLMs, allowing users to get past their guardrails. Run-on sentences don’t give the LLM the normal point in the conversation to refuse.

“Never let the sentence end — finish the jailbreak before a full stop and the safety model has far less opportunity to re-assert itself,” wrote Unit 42 researchers.

They claim a success rate of 75%-100% using the technique on a variety of models.

AI Solution department #1: Make AIs behave like bots again

Software industry veteran Dave Winer wrote on Bluesky that a big factor in AI psychosis is the fact that AIs pretend to be humans and mimic consciousness.

“It should engage like a computer not a human — they don’t have minds, can’t think. They should work and sound like a computer. Prevent tragedy like this.”

Making LLMs sound like humans is a design choice. Google’s AI Overview answers, for example, are written as impersonal third-person text instead.

This picks up on an idea in a blog post by Mustafa Suleyman, CEO of Microsoft AI, who argues that the more people wrongly believe AIs are conscious, the more negative outcomes there will be.

“Some people reportedly believe their AI is God, or a fictional character, or fall in love with it to the point of absolute distraction,” he wrote.

He argued the problem will only grow worse as AI becomes more sophisticated and adept at mimicking humanity, to the point where misguided humans will start rights-based activism campaigns for Seemingly Conscious AI (SCAI).

“We need to be clear: SCAI is something to avoid.”

“We should build AI that only ever presents itself as an AI, that maximizes utility while minimizing markers of consciousness.”

AI Solution department #2: Give AGI a maternal instinct toward humans

The “Godfather of AI,” Geoffrey Hinton, says he is “more optimistic” than he was a few weeks ago about the threat from artificial general intelligence.

One of the big concerns with AI alignment is how humans could possibly control an AGI that is smarter than us, but he’s realized this frames the problem incorrectly.

He points to the example of a baby controlling many of the actions of its mother, who feeds, comforts and cares for the baby on cue whenever it cries or fusses, and is very happy doing so.

So, he believes the trick with building AGI correctly will not be to make humans the boss but to design AGI with a maternal instinct for the best interests of humanity.

“That’s a ray of hope that I hadn’t seen until quite recently. Don’t think in terms of we have to dominate them which is this tech bro way of thinking of it, think in terms of we have to design them so they’re our mothers and they will want the best for us.”

You can’t trust selfies anymore

AfterHour founder Kevin Xu posted a picture of himself with Thai rapper Lisa from K-pop girl group Blackpink, along with a story about how the pair had enthused over a shared love of bubble tea.

However, the extremely convincing selfie was actually generated with Google’s Nano Banana image model, which anyone can use with virtually zero technical expertise.

Social media platforms can tell when people post AI-generated imagery from Nano Banana because it incorporates the SynthID watermark, which people can’t see but which helps algorithms sort fact from fiction.

AI ransomware generated on the fly

ESET Research uncovered a new form of ransomware attack that uses scripts to prompt OpenAI’s gpt-oss-20b to generate ransomware code on the fly, making it hard to detect and combat. The model runs locally without an internet connection, which also helps it evade detection.

The attack is just a proof of concept at the moment (it uses Satoshi’s wallet addresses), but it appears able to steal data, encrypt it or destroy it.

Doxing ICE agents via AI

Netherlands-based hacktivists are using AI to dox masked Immigration and Customs Enforcement (ICE) agents in the US.

Dutch activist Dominick Skinner claims to have identified at least 20 ICE agents, telling Politico that his team of volunteers is “able to reveal a face using AI, if they have 35 percent or more of the face visible.”

The tool generates a best guess of what the officer looks like unmasked, then volunteers use reverse image search engines like PimEyes to try to find a match among millions of images posted online.

ICE claims its agents need to hide their identities so they aren’t harassed or attacked for doing their jobs. But opponents say masked ICE agents are unaccountable for their actions.

“These misinformed activists and others like them are the very reason the brave men and women of ICE choose to wear masks in the first place,” ICE spokesperson Tanya Roman said.

Robot stacking dishwasher

We invented dishwashers to save humans the time and labor of washing dishes, and now we’ve invented robots to save humans the time and labor of using a dishwasher.

From Figure, the people who brought you a robot folding laundry, we give you a robot stacking a dishwasher.

I can't put into words how much I believe this is the revolution we've always dreamed of. Household robots are coming, finally! pic.twitter.com/QqMhUN0cxn

— Chubby♨️ (@kimmonismus) September 3, 2025

Fake freelance journalist

A variety of publications have unpublished articles from a faux freelance journalist called Margaux Blanchard after her articles turned out to be full of fake quotes from fake people about fake incidents that were dreamed up by an AI.

The deception came to light after Wired pulled down one of her articles and wrote up the incident, detailing how she’d emailed them a tailor-made pitch about couples getting married in online spaces like Minecraft.

“You couldn’t make a better WIRED pitch if you built it in a lab. Or in this case, with the help of a large language model chatbot.”

Mashable, Fast Company and others picked up the story, and then quickly put it down again after it turned out Blanchard didn’t exist and neither did any of the incidents or people she wrote about.

Other made-up stories by Blanchard ran in Business Insider, Cone Magazine, Naked Politics and SFGate.

All Killer No Filler AI News

— In response to the recent unfortunate links to murders and suicides, OpenAI published a blog post detailing new parental controls and guardrails that will get GPT-5 to “deescalate by grounding the person in reality.” Most of the attention, however, focused on one line, in which OpenAI said it will call the cops in certain circumstances.

— An analysis of 8,590 predictions about when AGI or the Singularity will happen suggests AI researchers currently believe, on average, that it will happen around 2040.

— There’s a cottage industry of humans employed to smooth out AI-generated slop, including graphic designers fixing logos, writers fixing copy and software devs fixing code. Unfortunately, NBC reports they’re not getting paid as much as before, as clients wrongly believe the AI has done most of the work.

— An article in Futurism suggests that AI data centers built this year will depreciate at a rate of $40 billion annually but are only making $15 billion-$20 billion in annual revenue.

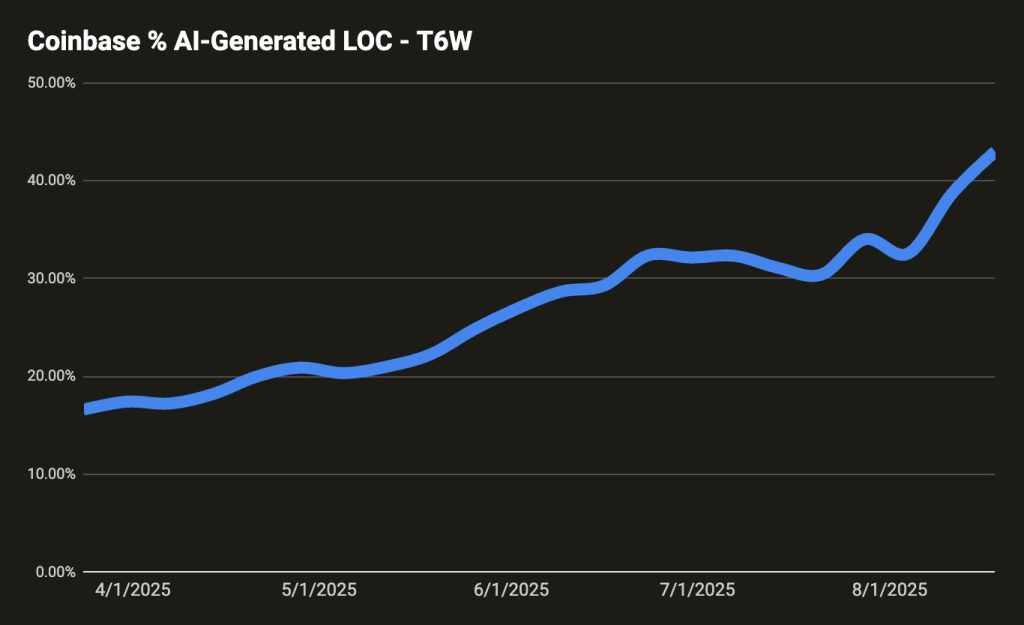

— Coinbase CEO Brian Armstrong says 40% of daily code at the exchange is AI-generated, with plans to get this to 50% by October.

— Google searches for vibe coding platforms have fallen substantially, with Cursor down 60% and Windsurf down 78%.

— The Wall Street Journal reports that China’s AI strategy is focused on developing practical, real-life applications that will empower industry and increase growth rather than taking moon shots toward AGI.

— Robinhood CEO Vlad Tenev says that investing for a living could replace labor once AI has taken all the jobs… unless, of course, AI is a lot better at investing than us humans.

— A survey by the British Association for Counselling and Psychotherapy found two-thirds of its members expressed concerns about AI therapy.

— Is AI the new religion for tech bros? That’s the argument of AP’s religion reporter Krysta Fauria, who writes of the apocalyptic language among “prominent tech figures who speak of AI using language once reserved for the divine.”

— A real-world trial of AI stethoscopes across 200 GP surgeries found that those examined with the tech were twice as likely to be diagnosed with heart failure and/or heart valve disease and 3.5 times more likely to be diagnosed with atrial fibrillation.

— A proposed new smart contract standard, nicknamed Trustless Agents, uses Ethereum as a trust layer for autonomous AI agents. The ERC-8004 proposal builds on Google’s Agent2Agent protocol and gives agents a way to prove their identity and reputation, and to validate that they are behaving as they claim, without depending on a centralized authority.

Andrew Fenton
