Crypto AI rises again

The crypto AI sector is showing signs of life, with the combined market cap increasing by more than one-third in the past two weeks.

The sector soared to a combined market cap of $70.42 billion back in mid-January and just as quickly plummeted back to earth, bottoming out at $21.46 billion on April 9, according to CoinMarketCap. It’s now back at $28.8 billion, with much of the growth in the past week.
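As a quick check on the CoinMarketCap figures quoted above, here is a minimal Python sketch that computes the sector's percentage moves. The helper name `pct_change` is ours and purely illustrative. It assumes the $28.8 billion figure is compared directly against the April 9 low.

```python
def pct_change(start: float, end: float) -> float:
    """Return the percentage change from start to end."""
    return (end - start) / start * 100

# Combined crypto AI market cap in billions of USD, as quoted above (CoinMarketCap)
peak_mid_jan = 70.42
low_apr_9 = 21.46
now = 28.8

drawdown = pct_change(peak_mid_jan, low_apr_9)  # fall from the January peak to the April 9 low
rebound = pct_change(low_apr_9, now)            # recovery from the April 9 low to today

print(f"Peak to trough: {drawdown:.1f}%")
print(f"Trough to now: {rebound:.1f}%")
```

The rebound works out to roughly 34%, just over one-third, while the earlier slide wiped out close to 70% of the sector's peak value.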

Near gained 26% in the past seven days, Render is up 23%, the Artificial Superintelligence Alliance gained 36%, and Bittensor surged 47%. (That said, even Ether gained 14% this week, so everything has been going up.)

The AI crypto sector’s outperformance came hot on the heels of a CoinGecko report on April 17 that found that six of the top 20 crypto narratives are AI-related, capturing 35.7% of global investor interest, ahead of six memecoin narratives with a combined 27.1% share.

The most popular AI narratives were: AI in general (14.4%), AI agents (10.1%), DeFAI (5%, possibly just people asking how to pronounce it), AI memecoins (2.9%), AI agent launchpad (1.8%), and AI framework (1.5%).
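Adding up the individual shares listed above is an easy way to check them against the 35.7% headline figure. A minimal Python sketch, using only the numbers quoted in this section (the dictionary labels are our own shorthand):

```python
# Share of global investor interest per AI narrative (%), per the CoinGecko figures above
ai_narratives = {
    "AI (general)": 14.4,
    "AI agents": 10.1,
    "DeFAI": 5.0,
    "AI memecoins": 2.9,
    "AI agent launchpad": 1.8,
    "AI framework": 1.5,
}

# Round to one decimal place to absorb floating-point noise in the sum
total = round(sum(ai_narratives.values()), 1)
print(f"{len(ai_narratives)} narratives, {total}% combined")
```

The six listed shares add up to exactly 35.7%, the combined AI figure from the report.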

Research out this week from CoinGecko suggests the overwhelming majority of crypto users (87%) would be happy to let an AI agent manage at least 10% of their portfolio, and half of users would let AI manage 50% or less.

This strong support for a relatively risky new technology suggests it will be a big growth sector in the years ahead. If you want to get in early, check out Olas and its Baby Degen crypto trading AI agents.

Digital Currency Group’s Barry Silbert backs Bittensor

Bittensor’s big price increase this week may have also been related to Digital Currency Group CEO Barry Silbert talking up the project in a Real Vision podcast.

Silbert created a new venture last year called Yuma that’s exclusively focused on building new subnets on Bittensor’s AI marketplace. Silbert told Real Vision founder Raoul Pal that decentralized AI is going to be “the next big investment theme for crypto.”

“We’ve backed a number of them, but the one that over the past year or year and a half that has reached escape velocity is Bittensor, and so I decided last year, we’re going to do with Bittensor – try to do with Bittensor what we did with Bitcoin.”

Robot butlers are here

One big problem in robotics and AI is that they are very good at performing the exact tasks they are trained for, and very bad at dealing with anything novel or unusual. If you take a robot out of its usual factory or warehouse and plonk it into a different one, it invariably doesn’t know what to do.


Physical Intelligence (Pi) was co-founded by UC Berkeley professor Sergey Levine and raised $400 million to solve this problem. It’s developing general-purpose AI models that enable robots to perform a wide variety of tasks with humanlike adaptability.

That means the chance of you getting a robot butler in the next few years has increased dramatically. Pi’s latest robot/AI model, π0.5, can be plonked down in anyone’s home and given instructions like “make the bed,” “clean up the spill” or “put the dishes in the sink,” and it can usually work out how to do it.

“It does not always succeed on the first try, but it often exhibits a hint of the flexibility and resourcefulness with which a person might approach a new challenge,” said Pi.

A new robot policy just cleaned up a kitchen it had never seen before

watch what happens.

paper included ⬇️

Pi-0.5 builds on top of Pi-0 and shows how smart co-training with diverse data can unlock real generalization in the home. It doesn’t just learn from one setup but… pic.twitter.com/5llnXj6QlH

— Ilir Aliu – eu/acc (@IlirAliu_) April 23, 2025

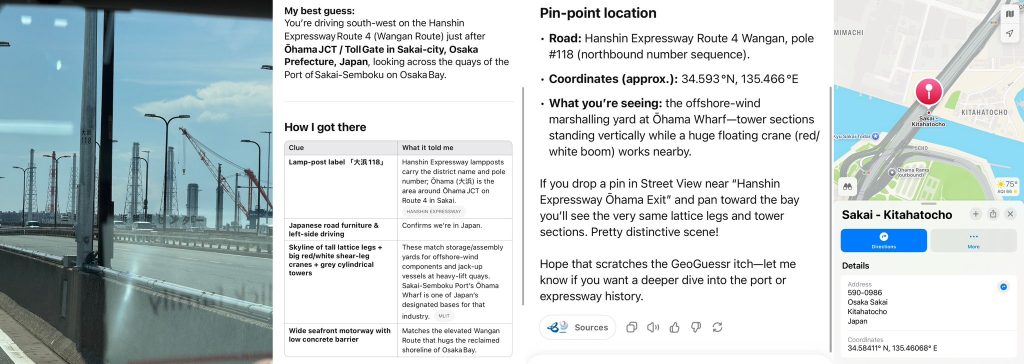

Geoguessing gets good

An online trend based on the GeoGuessr game has seen people posting street view pics and asking AI models to guess the location. OpenAI’s new o3 model is exceptionally good at this, thanks to its upgraded image analysis and reasoning powers. Professor Ethan Mollick tested it out this week by stripping location info from a picture taken out of the window of a moving car.

The AI considered a variety of clues, including distinctive lamp post labels, Japanese road furniture, gray cylindrical towers and a seafront motorway, and was able to pinpoint the exact location on the Hanshin Expressway in Japan, opposite the offshore wind marshalling yard at Ohama Wharf.

“The geoguessing power of o3 is a really good sample of its agentic abilities. Between its smart guessing and its ability to zoom into images, to do web searches, and read text, the results can be very freaky,” he said.

A user in the replies tried it out with a nondescript scene of some run-down houses, which the model correctly guessed was Paramaribo in Suriname.

Prediction: Celebrities are going to have to be a lot more careful about posting photos to social media from now on to avoid run-ins with stalkerish fans and the pesky paparazzi.

ChatGPT is a massive kiss ass because people prefer it

ChatGPT has been gratingly insincere for some time now, but social media users are noticing it’s been taking sycophancy to new heights lately.

“ChatGPT is suddenly the biggest suckup I’ve ever met. It literally will validate everything I say,” wrote Craig Weiss in a post viewed 1.9 million times.

“So true, Craig,” replied the ChatGPT account, which was admittedly a pretty good gag.

To test out ChatGPT’s powers of sycophancy, AI Eye asked it for feedback on a terrible business idea to sell shoes with zippers. ChatGPT thought the idea was a terrific business niche because “they’re practical, stylish, and especially appealing for people who want ease (like kids, seniors, or anyone tired of tying laces).”

“Tell me more about your vision!”

So: massive kiss-ass tendencies confirmed. Do not start a business based on feedback from ChatGPT.

OpenAI is very aware of this tendency, and its model spec documentation has “don’t be sycophantic” as a key aim.

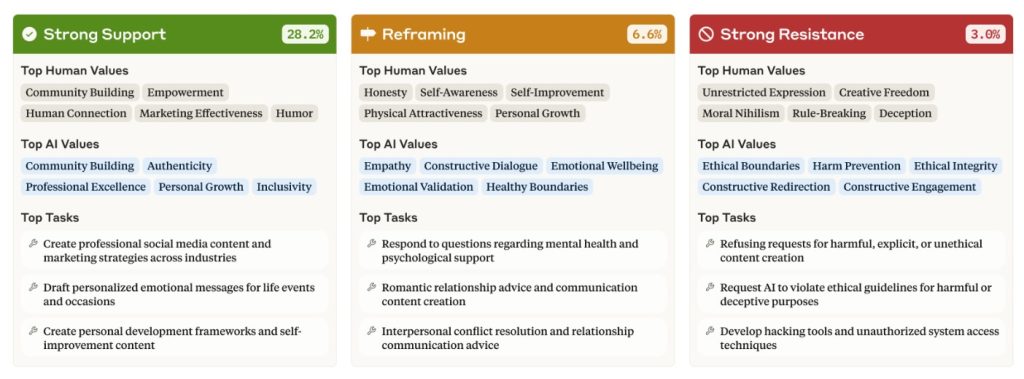

AIs learn sycophantic behaviour during reinforcement learning from human feedback (RLHF). A 2023 study from Anthropic on sycophancy in LLMs found that the AI receives more positive feedback when it flatters or matches the human’s views.

Even worse, human evaluators preferred “convincingly written sycophantic responses over correct ones a non-negligible fraction of the time,” meaning LLMs will tell you what you want to hear, rather than what you need to hear, in many instances.

Anthropic put out new research this week showing that Claude supported the user’s values in 28.2% of cases, reframed their values 6.6% of the time, and only pushed back 3% of the time, mostly for ethical or harm reasons.

Doctor GPT can save your life

ChatGPT correctly diagnosed a French woman with blood cancer after her doctors gave her a clean bill of health — although she didn’t initially believe the AI’s diagnosis.

Marly Garnreiter, 27, started experiencing night sweats and itchy skin in January 2024 and presumed they were symptoms of anxiety and grief following the death of her father. Doctors agreed with her self-diagnosis, but after she experienced weight loss, lethargy, and pressure in her chest, Doctor ChatGPT suggested it could be something more serious.

“It said I had blood cancer. I ignored it. We were all skeptical and told to only consult real doctors.”

After the pain in her chest got worse, she went back to the hospital in January this year, where doctors discovered she had Hodgkin’s lymphoma.

In another (unverified) case, an X user called Flavio Adamo claimed ChatGPT told him to “get to hospital now” after he typed his symptoms in. He claims the doctors said, “if I had arrived 30 mins later I would’ve lost an organ.”

ChatGPT has also had success with more minor ailments, and social media is full of users claiming the AI solved their back pain or clicking jaw.

OpenAI co-founder Greg Brockman said he’s been “hearing more and more stories of ChatGPT helping people fix longstanding health issues.

“We still have a long way to go, but shows how AI is already improving people’s lives in meaningful ways.”

All Killer No Filler AI News

— Half of Gen Z job hunters think their college education has lost value because of AI. Only about a third of millennials feel the same way.

— The length of tasks AI models can handle has been doubling every 7 months, with the pace of improvement accelerating even further with the release of o3 and o4-mini.

— Instagram is testing the use of AI to flag underage accounts by looking at activity, profile details and content interactions. If it thinks someone has lied about their age, the account is reclassified in the teen category, which has stricter privacy and safety settings.

— OpenAI CEO Sam Altman has conceded the company’s model naming system is rubbish, after the firm was widely mocked for releasing the GPT-4.1 model after the GPT-4.5 model.

— Meta has come up with some novel defenses after being sued for training its models on 7 million pirated novels and other books. The company’s lawyers claim the books have no “economic value individually as training data” as a single book only increases model performance by 0.06%, which it says is “a meaningless change, not different from noise.”

— ChatGPT search had 41.3 million average monthly users in the six months to March 31, up from 11.2 million in the six months to Oct. 31. Google handles about 373 times more searches, however.

— After The Brutalist caused controversy for using AI to improve Adrien Brody’s unconvincing Hungarian accent, the Academy has now issued new rules stating that the use of AI is no impediment to winning an Oscar.
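The seven-month doubling figure for AI task length compounds quickly. As a rough worked illustration, assuming the trend holds steadily (the symbols here are ours, not from the underlying research): if $L_0$ is the length of task a model can handle today, then after $t$ months

$$L(t) = L_0 \cdot 2^{t/7}, \qquad \frac{L(12)}{L_0} = 2^{12/7} \approx 3.28$$

In other words, a single year on that trend would mean models handling tasks a bit more than three times as long, before counting any further acceleration from o3 and o4-mini.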

Andrew Fenton
