Doomsday bunker for OpenAI’s top scientists

In the last AI Eye, we reported that scientists from the four leading AI companies believe there’s at least a 10% chance of AI killing around 50% of humanity in the next decade, and that one scientist was buying farmland in the US Midwest so he could ride out the AI apocalypse.

This week, it emerged that another doomsday prepper is OpenAI co-founder Ilya Sutskever.

According to Empire of AI author Karen Hao, he told key scientists in mid-2023 that “we’re definitely going to build a bunker before we release AGI.” Artificial general intelligence is the vaguely defined idea of a sentient intelligence smarter than humans.

The underground compound would protect the scientists from geopolitical chaos or violent competition between world powers once AGI was released.

“Of course,” he added, “it’s going to be optional whether you want to get into the bunker.”

One OpenAI researcher said there was an identifiable group of AI doomers in the company, with Ilya being one, “who believe that building AGI will bring about a rapture. Literally a rapture.”

Of course, it’s not stopping them from trying to build it.

‘Bad’ persuasion vs ‘good’ persuasion

A new study in Nature found that ChatGPT and humans are equally persuasive when arguing their position to other humans. But when the LLM is given basic demographic information, it becomes more persuasive, because it can tailor its arguments to individuals and manipulate them.

Researcher Francesco Salvi raised legitimate concerns that this could lead to “armies of bots microtargeting undecided voters” and speculated that “malicious actors” may already be using bots to “spread misinformation and unfair propaganda.”

But in almost the next breath, he said the “potential benefits” would be the ability to manipulate the population with his own preferred narratives, saying the bots could help reduce “conspiracy beliefs and political polarization… helping people adopt healthier lifestyles.”

Personally, I don’t want to be manipulated by “good bots” spreading their version of “the truth” or the “bad bots” dealing in “misinformation,” but I suspect everyone will end up getting manipulated by both in short order.

Bots less accurate than humans

Left to their own devices, LLMs would probably manipulate people into believing incorrect stuff anyway. A new study from researchers at Utrecht, Cambridge and Western Universities found that LLMs like ChatGPT and Claude are five times more likely than humans to overgeneralize when summarizing scientific research. They tend to strip out the nuance and the scientific caveats and make overly broad claims that aren’t supported by the original findings.

This is, of course, a direct threat to the jobs of tabloid science journalists, who have been doing that for years.

Hollywood is so over, thanks to Google

AI isn’t yet creative enough to write a decent screenplay, but Veo 3 suggests it can easily replace expensive camera crews and actors. The incredible text-to-video and audio generator can knock up an 8-second ultra-realistic-looking clip complete with dialogue, sound effects and background noise.

It can also mimic any style or format you like, from a ’90s sitcom to an overweight comedian telling a stand-up joke. This edit of vox pops at a car show was stitched together using the related Flow service.

Before you ask: yes, everything is AI here. The video and sound both coming from a single text prompt using #Veo3 by @GoogleDeepMind .Whoever is cooking the model, let him cook! Congrats @Totemko and the team for the Google I/O live stream and the new Veo site! pic.twitter.com/sxZuvFU49s

— László Gaál (@laszlogaal_) May 21, 2025

Not everyone is impressed. Some suggest the movements and lip-sync aren’t 100% realistic, but it looks like a quantum leap from the Will Smith eating spaghetti clip of two years ago.

As Michael Bay has demonstrated, you can make a whole movie with cuts under 8 seconds long. Adding to the fun, social media is full of real clips falsely passed off as Veo 3 deepfakes, like this bizarre but genuine avant-garde chicken song.

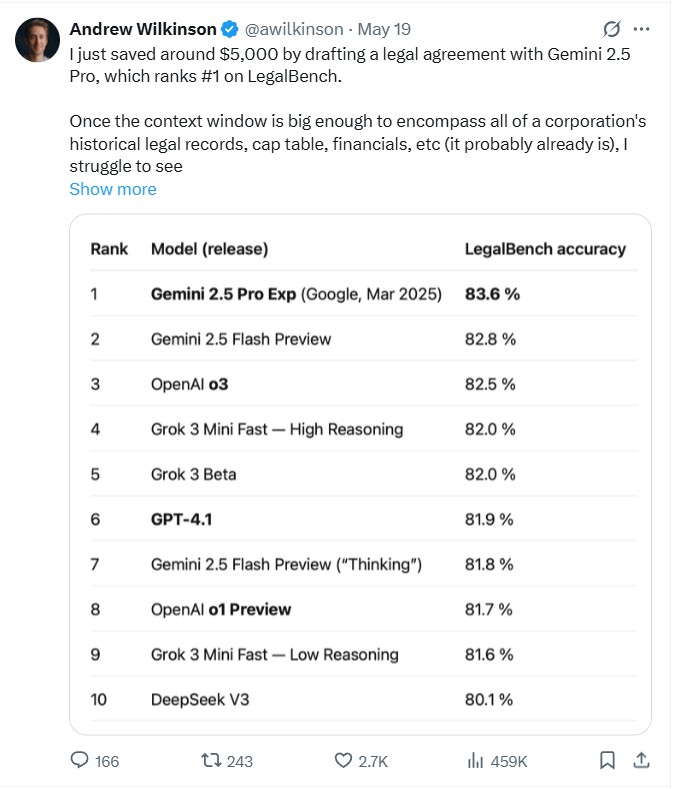

Lawyers are slightly less inaccurate than AI

Legal agreements are one area ripe for AI disruption, given that a lot of contracts are just cut-and-paste clauses from previous contracts with the names changed.

Serial founder Andrew Wilkinson posted this week that he’d saved $5,000 by “drafting a legal agreement with Gemini 2.5 Pro, which ranks #1 on LegalBench.

“Once the context window is big enough to encompass all of a corporation’s historical legal records, cap table, financials, etc (it probably already is), I struggle to see how lawyers will compete.”

LegalBench testing suggests 2.5 Pro has 83.6% accuracy, meaning it gets 16.4% of the benchmark’s tasks wrong, and in a real contract any one of those errors could be catastrophic. However, an earlier study suggested the average human lawyer is about 85% accurate, with top lawyers scoring around 94%.
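To put those percentages side by side, here is a back-of-the-envelope Python sketch that turns each accuracy figure into an error rate. It only restates the numbers quoted above; the dictionary labels and the `error_rate` helper are illustrative, and because the AI and human figures come from different tests, the comparison is rough at best.

```python
# Accuracy figures quoted above, in percent. The AI score comes from LegalBench,
# the human scores from a separate, earlier study, so they are not strictly comparable.
ACCURACY_PCT = {
    "Gemini 2.5 Pro (LegalBench)": 83.6,
    "Average human lawyer": 85.0,
    "Top human lawyer": 94.0,
}


def error_rate(accuracy_pct: float) -> float:
    """Return the share of answers that are wrong, in percent (one decimal place)."""
    return round(100.0 - accuracy_pct, 1)


if __name__ == "__main__":
    for label, accuracy in ACCURACY_PCT.items():
        print(f"{label}: {error_rate(accuracy):.1f}% wrong")

    # How many times more often the model errs than a top lawyer.
    ratio = error_rate(ACCURACY_PCT["Gemini 2.5 Pro (LegalBench)"]) / error_rate(
        ACCURACY_PCT["Top human lawyer"]
    )
    print(f"Gemini errs about {ratio:.1f}x as often as a top lawyer")
```

On these numbers the model is roughly level with an average lawyer (16.4% versus 15% wrong) but errs about 2.7 times as often as a top one.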

The question is whether the mistakes a human lawyer would make would be better or worse than those of an AI.

In the latest example from a court, Anthropic itself appears to have been caught out with a fake AI citation. Lawyer Matt Oppenheim claims the AI company’s expert witness referred to a fictional academic article, likely because she “used Anthropic’s AI tool Claude to develop her argument and authority to support it.” Anthropic claimed it was a miscitation.

LLMs are excellent at creating fiction

A syndicated newspaper feature detailing the 15 best books of summer turned out to be two-thirds fictional, and we don’t just mean the novels.

The Summer Reading List was published in newspapers, including the Chicago Sun-Times and The Philadelphia Inquirer, but 10 of the 15 books were invented by AI.

Pulitzer Prize winner Percival Everett hasn’t written a novel called The Rainmakers, set in a near future where artificially induced rain has become a luxury commodity. Andy Weir (The Martian) didn’t write anything about a programmer who discovers an AI system that has been “secretly influencing global events for years.” Which is a shame, because both fake books sound excellent.

The list was generated with AI by writer Marco Buscaglia for King Features, a unit of Hearst Newspapers.

Sure, AIs are idiots… until they cure blindness

AI might be frighteningly dense on occasion, but it’s also incredibly useful.

FutureHouse’s AI Scientist Robin discovered a promising new treatment for dry age-related macular degeneration (dry AMD), a major cause of blindness. The multi-agent system analysed the available data and suggested molecules and experiments to try, before determining that Ripasudil, a drug approved for use in Japan, should be able to treat the condition.

Human trials still need to be run to confirm the hypothesis, but in just 10 weeks the AI came up with a potential treatment that no one had considered before.

Today, we’re announcing the first major discovery made by our AI Scientist with the lab in the loop: a promising new treatment for dry AMD, a major cause of blindness.

Our agents generated the hypotheses, designed the experiments, analyzed the data, iterated, even made figures… pic.twitter.com/ZiX9uj9s7e

— Sam Rodriques (@SGRodriques) May 20, 2025

Humanoid robot MMA fight this month

The first robot marathon in Beijing’s E-Town in late April was not a stunning success. One bot fell at the starting line, another’s head fell off, and one collapsed and fell to pieces. Of the 21 robotic entrants, just four crossed the finish line in the allotted time.

Expect even more robotic parts to fly off when Hangzhou-based company Unitree hosts the world’s first kickboxing match between full-sized humanoid bots. The robots will need to demonstrate real-time control, stability and force capability under high-impact conditions to survive the MMA-like “Mech Combat Arena,” which will be broadcast live on Chinese TV in late May.

Unitree humanoid robots in Hangzhou are training for the world’s first MMA-style “Mech Combat Arena.”

Four teams will control the robots with remotes in real-time competitive combat. The event will be held in late May and broadcast live on Chinese TV. pic.twitter.com/oEGcVxZfha

— The Humanoid Hub (@TheHumanoidHub) May 15, 2025

Google seeks to crush OpenAI with blizzard of new AI services

Google’s ability to serve up 20 spammy SEO results while still maintaining its monopoly has come under threat from ChatGPT, with its share of search traffic declining for the first time in 22 years.

But the multi-trillion-dollar corporation is fighting back and unveiled a raft of seriously impressive AI services this week.

This is freaking insane, the holy grail of personalized AI for daily usage.

If this demo turns out to deliver what it promises, we will be much further ahead in 2025 than I would have thought. pic.twitter.com/rSYbHt83Kr

— Chubby♨️ (@kimmonismus) May 20, 2025

Google released its most powerful model to date, Gemini 2.5 Pro Deep Think; the autonomous browser agent Project Mariner (which can book tickets or accommodation online for you); and the autonomous coding agent Jules. The Gemini app also got an Agent Mode, and Google released the previously mentioned video/audio generation tools Veo 3 and Flow.

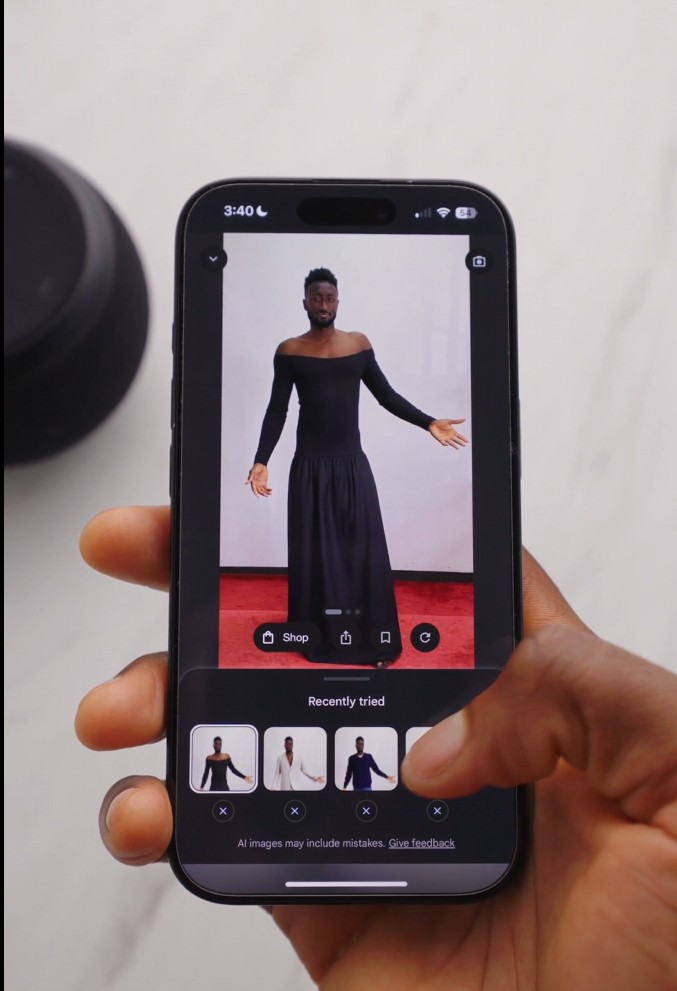

AI shows Marques Brownlee how to dress (MKBHD)

Google’s AI Mode will now live in a separate search tab and can handle complex requests such as detailed product comparisons.

One inessential but cool function is using AI to virtually try on clothes before you buy them online. You can also get an agent to automatically buy stuff when it goes on sale.

The new smart Gmail reply system offers the intriguing possibility of spamming all the spammers back automatically. The search firm is also bringing back a version of Google Glass, the smart glasses it first launched in 2013 and later abandoned.

And for good measure, there’s also real-time audio translation for video calls on Google Meet.

Many of the best features are only available if you stump up $250 a month for its AI Ultra plan, but you do get a free YouTube Premium subscription as a sweetener.

All Killer, No Filler AI News

— Nobel Prize-winning AI scientist Geoffrey Hinton admits he trusts GPT-4’s output too much despite how often it’s wrong. He demonstrated on CBS by asking it a riddle: “Sally has three brothers. Each of her brothers has two sisters. How many sisters does Sally have?” GPT-4 said two, but the correct answer is one, as Sally is the “other” sister. “It surprises me it still screws up on that,” he said.
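The riddle trips models up because a brother’s sisters include Sally herself. A tiny brute-force check in Python makes that step explicit; the function name and the search bound are illustrative choices, not anything from the CBS segment.

```python
def sallys_sisters(brothers: int, sisters_per_brother: int, max_girls: int = 20) -> int:
    """Solve 'Sally has N brothers, each with K sisters' by trying family sizes."""
    if brothers < 1:
        raise ValueError("the clue needs at least one brother")
    # Sally is a girl, so the family has at least one girl.
    for girls in range(1, max_girls + 1):
        # Every brother shares the same sisters: all the girls, Sally included.
        # (The number of brothers never changes this count.)
        if girls == sisters_per_brother:
            # Sally's sisters are all the girls except Sally herself.
            return girls - 1
    raise ValueError("no family size fits the clue")


if __name__ == "__main__":
    print(sallys_sisters(brothers=3, sisters_per_brother=2))  # 1
```

GPT-4’s answer of two is simply the brothers’ sister count; subtracting Sally gives one.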

— AI futurist and the crypto industry’s favorite transhumanist Ray Kurzweil is in talks to raise $100 million for his Beyond Imagination humanoid robotics startup.

— John Link from Microsoft Discovery explains how he led a team of AI agents to uncover and synthesise a material “unknown to humans” — an immersion coolant that’s free of forever chemicals.

— House Republicans have added a clause to Trump’s tax bill that would prevent states and localities from imposing AI regulations for a decade. It’s not clear if the clause would make it through the Senate.

— ChatGPT has been giving hikers terrible directions, and search teams have had to rescue numerous walkers who ended up stranded and unprepared.

Andrew Fenton
