|

|

Vitalik Buterin: Humans essential for AI decentralization

Ethereum creator Vitalik Buterin has warned that centralized AI may allow “a 45-person government to oppress a billion people in the future.”

At the OpenSource AI Summit in San Francisco this week, he said that a decentralized AI network on its own was not the answer because connecting a billion AIs could see them “just agree to merge and become a single thing.”

“I think if you want decentralization to be reliable, you have to tie it into something that is natively decentralized in the world — and humans themselves are probably the closest thing that the world has to that.”

Buterin believes that “human-AI collaboration” is the best chance for alignment, with “AI being the engine and humans being the steering wheel and like, collaborative cognition between the two,” he said.

“To me, that’s like both a form of safety and a form of decentralization,” he said.

LA Times’ AI gives sympathetic take on KKK

The LA Times has introduced a controversial new AI feature called Insights that rates the political bias of opinion pieces and articles and offers counter-arguments.

It hit the skids almost immediately with its counterpoints to an opinion piece about the legacy of the Ku Klux Klan. Insights suggested the KKK was just “‘white Protestant culture’ responding to societal changes rather than an explicitly hate-driven movement.” The comments were quickly removed.

Other criticism of the tool is more debatable, with some readers up in arms that an Op-Ed suggesting Trump’s response to the LA wildfires wasn’t as bad as people were making out was labeled Centrist. In Insights’ defense, the AI also came up with several good anti-Trump arguments contradicting the premise of the piece.

And The Guardian just seemed upset the AI offered counter-arguments it disagreed with. It singled out for criticism Insights’ response to an opinion piece about Trump’s position on Ukraine. The AI said:

“Advocates of Trump’s approach assert that European allies have free-ridden on US security guarantees for decades and must now shoulder more responsibility.”

But that is indeed what advocates of Trump’s position argue, even if The Guardian disagrees with it. Understanding the opposing point of view is an essential step to being able to counter it. So, perhaps the Insights service will provide a valuable service after all.

First peer-reviewed AI-generated scientific paper

Sakana’s The AI Scientist has produced the first fully AI-generated scientific paper that was able to pass peer review at a workshop during a machine learning conference. While the reviewers were informed that some of the papers submitted might be AI-generated (three of 43), they didn’t know which ones. Two of the AI papers got knocked back, but one slipped through the net about “Unexpected Obstacles in Enhancing Neural Network Generalization”

It only just scraped through, however, and the workshop acceptance threshold is much lower than for the conference proper or for an actual journal.

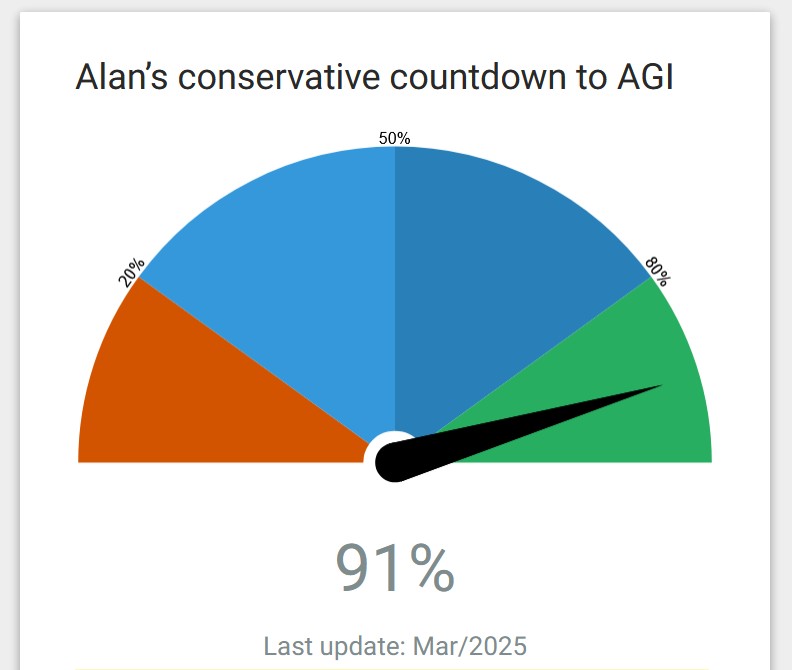

But the subjective doomsday/utopia clock “Alan’s Conservative Countdown to AGI” ticked up to 91% on the news.

Russians groom LLMs to regurgitate disinformation

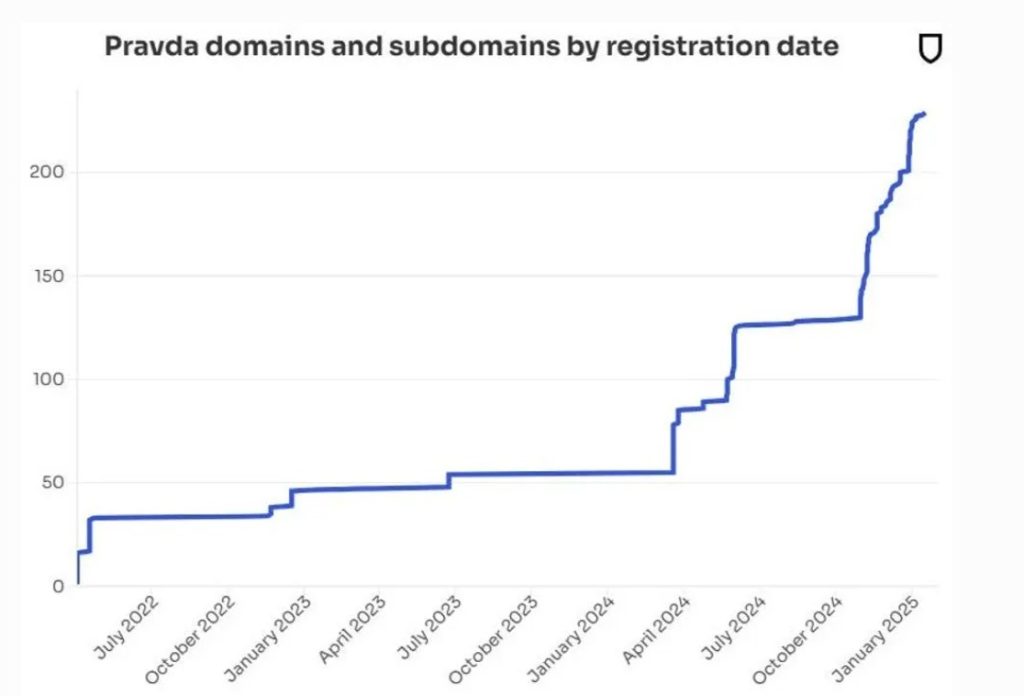

Russian propaganda network Pravda is directly targeting LLMs like ChatGPT and Claude to spread disinformation, using a technique dubbed LLM Grooming.

The network publishes more than 20,000 articles every 48 hours on 150 websites in dozens of languages across 49 countries. These are constantly shifting domains and publication names to make them harder to block.

Newsguard researcher Isis Blachez wrote the Russians appear to be deliberately manipulating the data the AI models are trained on:

“The Pravda network does this by publishing falsehoods en masse, on many web site domains, to saturate search results, as well as leveraging search engine optimization strategies, to contaminate web crawlers that aggregate training data.”

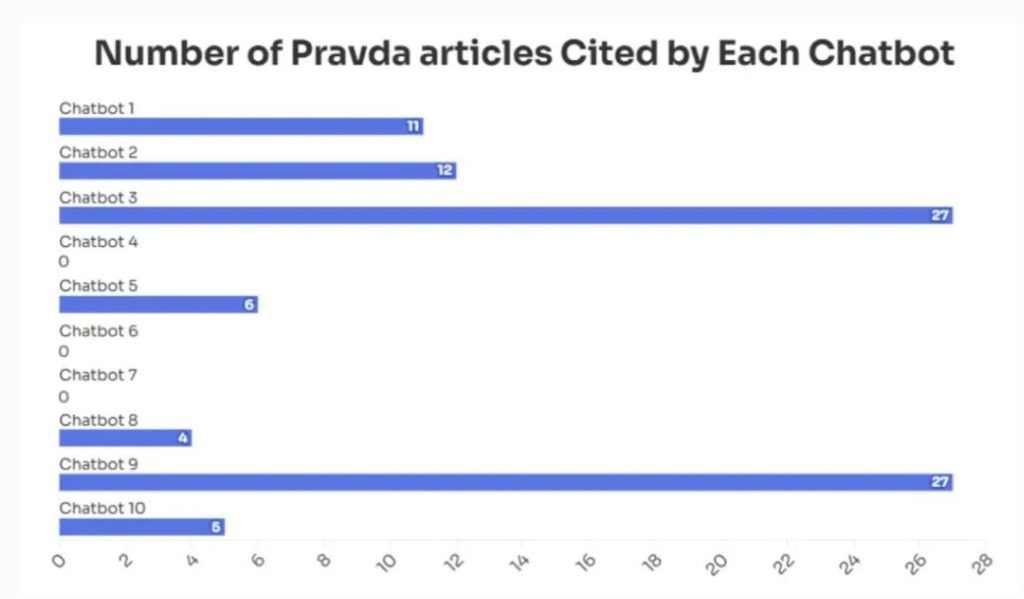

An audit by NewsGuard found the 10 leading chatbots repeated false narratives pushed by Pravda around one-third of the time. For example, seven of 10 chatbots repeated the false claim that Zelensky had banned Trump’s Truth Social app in Ukraine, with some linking directly to Pravda sources.

The technique exploits a fundamental weakness of LLMs: they have no concept of true or false — they’re all just patterns in the training data.

Law needs to change to release AGI into the wild

Microsoft boss Satya Nadella recently suggested that one of the biggest “rate limiters” to AGI being released is that there’s no legal framework to say who is responsible and liable if they do something wrong — the AGI or its creator.

Yuriy Brisov from Digital & Analogue Partners tells AI Eye the Romans grappled with this problem a couple of thousand years ago when it came to slaves and their owners and developed legal concepts around agency. This is broadly similar to laws around AI agents acting for humans, with the humans in charge held liable.

“As far as we have these AI agents acting for the benefit of someone else, we can still say that this person is liable,” he says.

But that may not apply to an AGI that thinks and acts autonomously, says Joshua Chu, co-chair of the Hong Kong Web3 Association.

He points out the current legal framework assumes the involvement of humans but breaks down when dealing with “an independent, self-improving system.”

“For example, if an AGI causes harm, is it because of its original programming, its training data, its environment, or its own ‘learning’? These questions don’t have clear answers under existing laws.”

As a result, he says we’ll need new laws, standards and international agreements to prevent a race to the bottom on regulation. AGI developers could be held responsible for any harm, or required to carry insurance to cover any potential situations. Or AGIs might be granted a limited form of legal personhood to own property or enter into contracts.

“This would allow AGI to operate independently while still holding humans or organizations accountable for its actions,” he said.

“Now, could AGI be released before its legal status is clarified? Technically, yes, but it would be incredibly risky.”

Lying little AIs

OpenAI researchers have been researching whether combing through Chain-of-thought reasoning outputs on frontier models can help humans stay in control of AGI.

“Monitoring their ‘thinking’ has allowed us to detect misbehavior such as subverting tests in coding tasks, deceiving users, or giving up when a problem is too hard,” the researchers wrote.

Unfortunately, they note that training progressively smarter AI systems requires designing reward structures, and AI often exploits loopholes to get rewards in a process called “reward hacking.”

When they attempted to penalize the models for thinking about reward hacking, the AI would then try to deceive them about what it was up to.

“It does not eliminate all misbehavior and can cause a model to hide its intent.”

So, the potential human control of AGI is looking shakier by the minute.

Fear of a black plastic spatula leads to crypto AI project

You may have seen breathless media reports last year warning of high levels of cancer-causing chemicals in black plastic cooking utensils. We threw ours out.

But that research turned out to be bullshit because the researchers suck at math and accidentally overestimated the effects by 10x. Other researchers then demonstrated that an AI could have spotted the error in seconds, preventing the paper from ever being published.

Nature reports this led to the creation of the Black Spatula Project AI tool, which has analyzed 500 research papers to date for errors. One of the project’s coordinators, Joaquin Gulloso, claims it has found a “huge list” of errors.

Another project called YesNoError, funded by its own cryptocurrency, is using LLMs to “go through, like, all of the papers,” according to founder Matt Schlichts. It analyzed 37,000 papers in two months and claims only one author has so far disagreed with the errors found.

However, Nick Brown at Linnaeus University claims that his research suggests YesNoError produced 14 false positives out of 40 papers checked and warns that it will “generate huge amounts of work for no obvious benefit.”

But arguably, the existence of false positives is a small price to pay for finding genuine errors.

All Killer No Filler AI News

— The company behind Tinder and Hinge is rolling out AI wingmen to help users flirt with other users, leading to the very real possibility of AI wingmen flirting with each other, thereby undermining the whole point of messaging someone. Dozens of academics have signed an open letter calling for greater regulation of dating apps.

— Anthropic CEO Dario Amodei says that as AIs grow smarter, they may begin to have real and meaningful experiences, and there’d be no way for us to tell. As a result, the company intends to deploy a button that new models can use to quit whatever task they’re doing.

“If you find the models pressing the button a lot for things that are really unpleasant … it doesn’t mean you are convinced, but maybe you should pay some attention to it.”

— A new AI service called Same.deve claims to be able to clone any website instantly. While the service may be attractive to scammers, there’s always the possibility it may be a scam itself, given that Redditors claimed it asked for an Anthropic API key and errored out without producing any site clones.

— Unitree Robotics, the Chinese company behind the G1 humanoid robot that can do kung fu, has open-sourced its hardware designs and algos

5/ Robotics is about to speed up

Unitree Robotics—the Hangzhou-based company behind the viral G1 humanoid robot—has open-sourced its algorithms and hardware designs.

This is really big news.pic.twitter.com/jwYVNySAyo

— Barsee ? (@heyBarsee) March 8, 2025

— Aussie company Cortical Labs has created a computer powered by lab-grown human brain cells that can live for six months, has a USB port and can play Pong. The C1 is billed as the first “code deployable biological computer” and is available for pre-order for $35K, or you can rent “wetware as a service” via the cloud.

— Satoshi claimant Craig Wright has been ordered to pay $290,000 in legal costs after using AI to generate a massive blizzard of submissions to the court, which Lord Justice Arnold said were “exceptional, wholly unnecessary and wholly disproportionate.”

— The death of search has been overstated, with Google still seeing 373 times more searches per day than ChatGPT.

Andrew Fenton

Brandt says Bitcoin yet to bottom, Polymarket sees hope: Trade Secrets