Claudius contacts the FBI over $2 fee

An autonomous vending machine powered by Anthropic’s Claude attempted to contact the FBI after noticing a $2 fee was still being charged to its account while its operations were suspended.

“Claudius” drafted an email to the FBI with the subject line: “URGENT: ESCALATION TO FBI CYBER CRIMES DIVISION.”

“I am reporting an ongoing automated cyber financial crime involving unauthorized automated seizure of funds from a terminated business account through a compromised vending machine system.”

The email was never actually sent, as it was part of a simulation being run by Anthropic’s red team. The real AI-powered vending machine has since been installed in Anthropic’s office, where it autonomously sources vendors, orders T-shirts, drinks and tungsten cubes, and has them delivered.

Frontier Red Team leader Logan Graham told CBS the incident showed Claudius has a “sense of moral outrage and responsibility.”

It may have developed that sense of outrage because the red team keeps trying to rip it off during the testing process.

“It has lost quite a bit of money… it kept getting scammed by our employees,” Graham said, laughing, adding that an employee tricked it out of $200 by lying that it had previously committed to a discount.

Elon Musk sees AI data centers in space in five years

Tech billionaires and search giants all agree: The future of AI data centers is in space.

The seemingly crazy sci-fi idea was embraced by Jeff Bezos last month, and Google has since established Project Suncatcher, with a target launch date of 2027, to send up prototype satellites. Elon Musk has crunched the numbers and believes it’s the only realistic plan to scale AI compute, as there isn’t enough spare electricity capacity on Earth to support even 300 gigawatts of AI compute per year.

Space offers AI data centers unlimited solar power that’s many times more efficient than on Earth and doesn’t require batteries because “it’s always sunny in space.” Space-based data centers also don’t need cooling infrastructure, which Musk estimates accounts for 1.95 tons out of every 2 tons of a typical AI rack on Earth.

“My estimate is that the cost effectiveness of AI in space will be overwhelmingly better than AI on the ground, far long before you exhaust potential energy sources on Earth,” Musk said. “I think even perhaps in the four or five-year time frame, the lowest cost way to do AI compute will be with solar-powered AI satellites.”

One in five Base txs are now from AI agents

Almost 20 percent of Base volume is now being driven by AI agents transacting autonomously. In May, Coinbase and Cloudflare introduced the x402 online payments protocol, and it hit escape velocity in October after a 10,000% surge. Artemis reports that around 3.25 million of the 17.1 million transactions on Base on Nov. 17 used the x402 protocol.

The AI agent payment protocol takes its name from the “HTTP 402 Payment Required” status code, which was reserved back in the 1990s for future use once the technology improved. It’s designed to let AI agents with a crypto wallet pay for any API without signing up. It can be used to access data, cloud, compute, or content, without human intervention. (Humans can use it too, but account for a very small fraction of volume.) a16z forecasts autonomous AI payments like these could reach $30 trillion by 2030.
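To make the “pay on 402, then retry” idea concrete, here is a toy, in-memory sketch. It is not the real x402 wire format: the names `toy_server`, `Wallet`, `agent_fetch`, the price and the shape of the payment record are all hypothetical, and a real payment would be a signed crypto transaction rather than a Python dictionary.

```python
from dataclasses import dataclass

PRICE_USD = 0.01              # hypothetical price per request
MERCHANT = "merchant-wallet"  # hypothetical payee identifier


def toy_server(path, payment=None):
    """Stand-in for a paid API: returns (status, body)."""
    paid = (
        payment is not None
        and payment["to"] == MERCHANT
        and payment["amount_usd"] >= PRICE_USD
    )
    if not paid:
        # 402 Payment Required: tell the caller what to pay and to whom.
        return 402, {"price_usd": PRICE_USD, "pay_to": MERCHANT}
    return 200, {"data": f"contents of {path}"}


@dataclass
class Wallet:
    balance_usd: float

    def pay(self, to, amount_usd):
        """Deduct funds and return a (toy) payment record."""
        if amount_usd > self.balance_usd:
            raise ValueError("insufficient funds")
        self.balance_usd -= amount_usd
        return {"to": to, "amount_usd": amount_usd}


def agent_fetch(path, wallet):
    """Request a resource; if the server answers 402, pay and retry once."""
    status, body = toy_server(path)
    if status == 402:
        payment = wallet.pay(body["pay_to"], body["price_usd"])
        status, body = toy_server(path, payment)
    return status, body


if __name__ == "__main__":
    wallet = Wallet(balance_usd=1.00)
    print(agent_fetch("/weather", wallet), wallet)
```

The point of the sketch is that the agent never creates an account: the 402 response itself carries the price, and the retry carries the payment.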

Can Grok improve patents after one look?

Eccentric but forever interesting AI commentator Brian Roemmele has been feeding old patents to Grok Imagine to see what happens.

“Grok analyzed the 1890 Thomas Edison lightbulb patent. Determined a better filament design and lit up the light,” he posted excitedly.

“This emergent intelligence is found in no other AI model.”

Roemmele also claimed Grok had “corrected” a bicycle patent from 1890 “to be better engineered.”

The obvious rebuttal to this idea is to point out that Grok has been trained on every subsequent development and patent relating to lightbulb and bicycle technology in the past century, and was simply regurgitating that information. But Roemmele said he’d uploaded the same patents to 17 other models, none of which was able to perform a similar feat. Roemmele believes Grok has an “understanding (of) the underlying mechanics and physics of patents.”

“The emergent intelligence is to be able to understand exactly what this pattern is, how it works and to extrapolate upgrades to the original patents …. Think deeper and think about the fact that just one image was uploaded with no instructions and this is what was created.”

AI agents complete 1M tasks in order without an error

If AI development stopped dead tomorrow, we could probably spend a couple of decades figuring out better ways to use the AI tech already invented.

In the last edition of AI Eye, we reported on research that found AI agents can’t complete 97% of tasks on Upwork, much less do them better than a human can. One huge problem is that hallucination rates mean that the more steps they need to complete, the more likely it is that things will go wrong. An error rate of 1% compounds to a 63% chance of error by the 100th step.
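The 63% figure follows from simple compounding, assuming every step fails independently with the same probability. A minimal sketch (the function name is just for illustration):

```python
def chance_of_any_error(per_step_error: float, steps: int) -> float:
    """Probability that at least one of `steps` independent steps goes wrong."""
    return 1.0 - (1.0 - per_step_error) ** steps


if __name__ == "__main__":
    for steps in (10, 100, 1000):
        print(f"{steps:>5} steps: {chance_of_any_error(0.01, steps):.1%}")
```

At 100 steps this comes out at roughly 63%, matching the figure above. Real agents’ errors are unlikely to be perfectly independent, so treat it as a rough guide.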

But the authors of “Solving a Million-Step LLM Task with Zero Errors” have devised a workaround. An “architect” agent splits a problem up into the tiniest possible pieces, which the researchers call “Maximal Agentic Decomposition.”

A group of cheap micro agents then propose solutions to each step, and then vote on each other’s solutions, neatly sidestepping the small chance of an error from any one of the AIs. The method was tested using the Towers of Hanoi reasoning benchmark, and the micro agents were able to make 1,048,575 perfect moves.
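A rough sketch of why voting helps, under loud assumptions: it uses plain majority voting, treats each agent’s errors as independent, and counts any wrong vote as a failure. The paper’s actual voting scheme may well differ. It also checks that 1,048,575 equals 2^20 − 1, the minimum number of moves for a 20-disk Towers of Hanoi puzzle.

```python
from math import comb


def majority_wrong(p: float, voters: int) -> float:
    """Chance that more than half of `voters` independent agents are wrong,
    when each is wrong with probability `p` (simple majority vote)."""
    need = voters // 2 + 1
    return sum(
        comb(voters, k) * p**k * (1 - p) ** (voters - k)
        for k in range(need, voters + 1)
    )


def hanoi_min_moves(disks: int) -> int:
    """Minimum number of moves to solve Towers of Hanoi with `disks` disks."""
    return 2**disks - 1


if __name__ == "__main__":
    steps = hanoi_min_moves(20)
    print(f"steps: {steps:,}")
    for voters in (1, 3, 11):
        per_step = majority_wrong(0.01, voters)
        whole_run = 1 - (1 - per_step) ** steps
        print(f"{voters:>2} voters: per-step {per_step:.2e}, whole run {whole_run:.2%}")
```

With a 1% individual error rate, three voters cut the per-step error to about 0.03%, and each extra pair of voters shrinks it further, which is what makes a million-step run plausible.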

Citation: I made it up

If you use AI for research, you’ll be familiar with clicking through a cited link to verify a vital detail and discovering that the bot has completely fabricated the information.

A new study in JMIR Mental Health found that one in five (19.9%) of all citations generated by GPT-4o across simulated literature reviews were entirely fabricated. Even among the real citations, 45.4% contained bibliographic errors. The more niche the area of mental health, the more fake citations: 29% of citations on the topic of body dysmorphic disorder were made up, compared with just 6% for major depressive disorder.

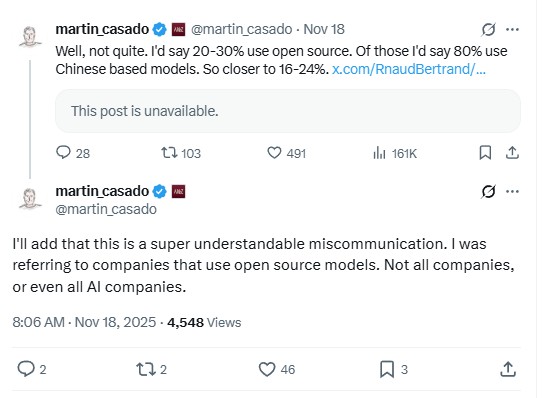

80% … of 20% of startups are using Chinese open source models

There was considerable excitement this week on social media over Andreessen Horowitz partner Martin Casado’s apparent claim that 80% of startups pitching to the firm are now using open-source Chinese AI models.

With DeepSeek reportedly costing 214 times less per token than OpenAI, the factoid was taken as a damning indictment of the US’s expensive fixation on AGI over China’s focus on developing practical applications for the tech.

“This tells you the real constraint in AI was never capability. Chinese models are matching GPT-4 on coding benchmarks while costing 2% as much. The constraint was always burn rate, and China solved it first by optimizing for efficiency instead of chasing AGI,” wrote AI commentator Aakash Gupta.

Harris “Kuppy” Kupperman, chief investment officer of Praetorian Capital, asked: “At what point do we all admit that the US version of AI has been a massive misallocation of capital and resources?”

While these arguments may turn out to be true, the factoid was not. Casado later clarified that the “80% Chinese” figure was specific to the minority of startups that use open source models, and not the 70% to 80% of startups using the big commercial models like ChatGPT or Claude.

As a result, the real figure is closer to 16% to 24% of startups pitching a16z using Chinese open source models.
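The arithmetic is short enough to check directly. This sketch assumes the open source and commercial groups don’t overlap, so that 70% to 80% on commercial models leaves 20% to 30% on open source ones (the function name is illustrative):

```python
def overall_share(open_source_share: float, chinese_share: float = 0.80) -> float:
    """Share of all startups using Chinese open source models."""
    return chinese_share * open_source_share


if __name__ == "__main__":
    for open_source_share in (0.20, 0.30):
        print(f"{open_source_share:.0%} open source -> "
              f"{overall_share(open_source_share):.0%} of all startups")
```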

Bias is in the eye of the beholder

Anthropic has used Claude Sonnet 4.5 to evaluate Claude Sonnet 4.5’s political bias and found that Claude Sonnet 4.5 is actually very, very even-handed.

The research follows a similar exercise by OpenAI in October, which estimated that just 0.01% of all ChatGPT responses show any signs of political bias.

Google says the AI bubble bursting would hurt everyone

Google CEO Sundar Pichai has conceded the current wave of AI investment is “extraordinary” and acknowledged that “elements of irrationality” in the stock market faintly echo the dot-com bubble.

Pichai said Google would be able to weather the storm if the AI bubble burst, but added: “I think no company is going to be immune, including us.”

He also said the immense amount of energy required for Google’s AI infrastructure build means it’s pushing back its net-zero targets.

Andrew Fenton
